The Underdog That Shook the AI World

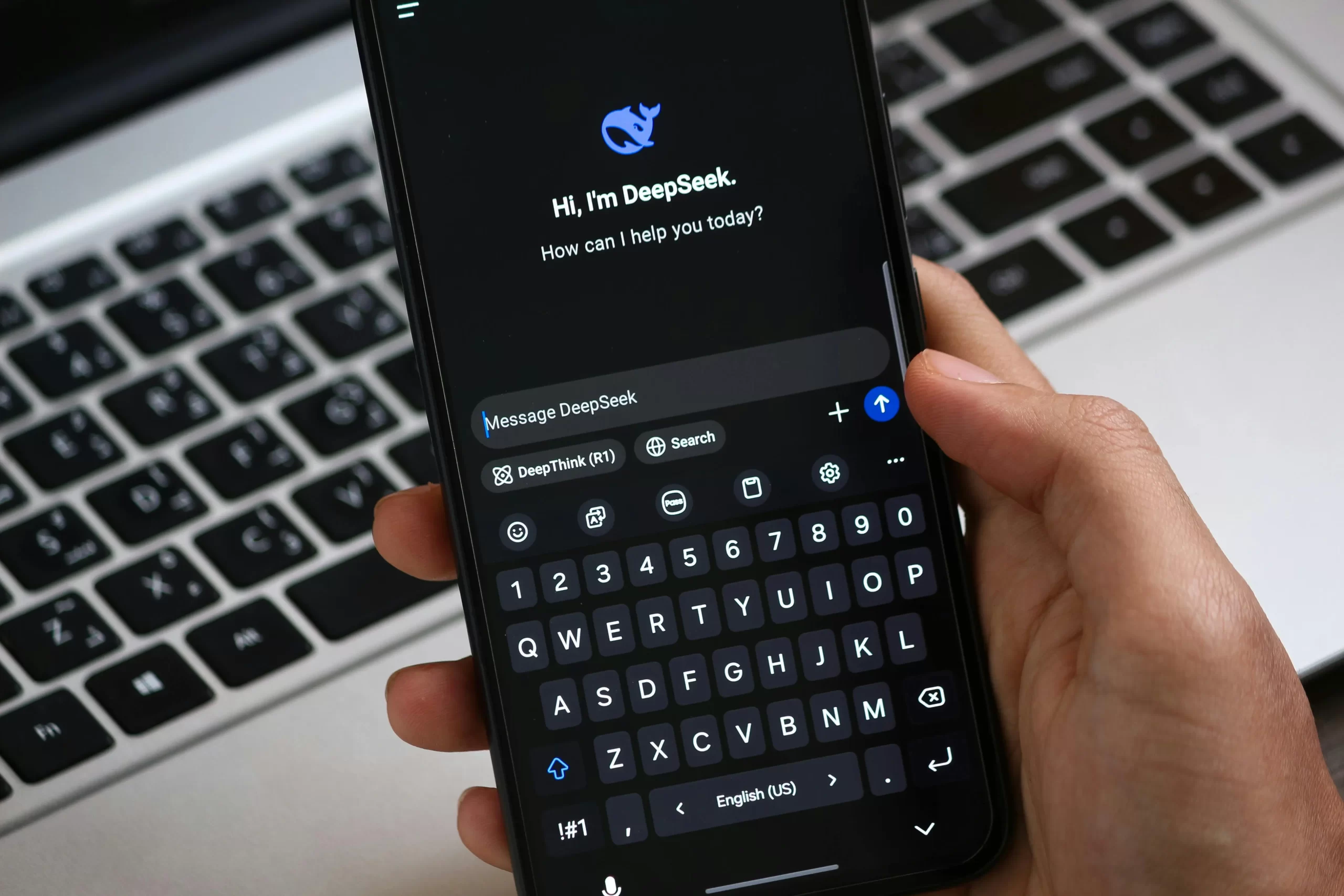

In an industry dominated by tech giants like OpenAI, Google, and Microsoft, a small Chinese startup called DeepSeek pulled off what many thought impossible. Using a technique called AI Distillation, it reportedly built a model that could compete with OpenAI’s best in just two months and for under $6 million.

This isn’t just another tech breakthrough. It’s a game-changer that proves innovation doesn’t always require billions in funding. Instead, smarter optimization and open collaboration can level the playing field.

So, what exactly is AI Distillation, and how did DeepSeek use it to blindside the biggest names in AI? Let’s break it down.

What Is AI Distillation? (And Why It’s a Big Deal)

AI Distillation is a machine learning technique where a smaller, more efficient model learns from a larger, more complex one—without needing to train from scratch. Think of it like a student absorbing a master’s knowledge.

Key Benefits of AI Distillation:

✔ Cost Efficiency – Training massive AI models can cost hundreds of millions of dollars. Distillation cuts that bill because the smaller model reuses what the larger one has already learned.

✔ Faster Development – DeepSeek went from an old Alibaba model to an OpenAI rival in two months.

✔ Democratizes AI – Startups and researchers can now build powerful AI without corporate-scale budgets.

The technique was popularized in a 2015 paper by Geoffrey Hinton (the “Godfather of AI”) and colleagues. DeepSeek built on it to produce models that run faster and more cheaply than the larger ones they learned from.
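
To make the student-and-master idea concrete, here is a minimal sketch of the classic soft-target distillation loss in plain Python. It is an illustration only, not DeepSeek’s actual training code: the function names, the made-up scores, and the temperature value of 2.0 are all assumptions chosen for the example. The student is penalized for disagreeing with the teacher’s softened probabilities, so it learns how the teacher ranks every answer, not just which answer it picks.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer spread."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's,
    scaled by temperature squared as in the soft-target formulation."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Made-up scores for three possible answers (illustrative values only).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher

print(distillation_loss(teacher, close_student))  # small loss
print(distillation_loss(teacher, far_student))    # much larger loss
```

In a real training loop this loss would be minimized over many examples, usually alongside an ordinary loss on the correct answers.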

How DeepSeek Used AI Distillation to Challenge OpenAI

1. They Started with an Existing Model (Instead of Reinventing the Wheel)

Rather than building from scratch, DeepSeek took Alibaba’s Qwen model and enhanced it, showing that smart iteration can beat brute-force development.

2. They Fed It OpenAI’s Knowledge

By reportedly analyzing 800,000 outputs from OpenAI’s models, they distilled the strongest responses into their own system, effectively learning from a more capable teacher.

3. They Achieved Breakthroughs at a Fraction of the Cost

For under $6 million, DeepSeek reportedly built a model comparable to ones costing 100x more, showing that efficiency can rival raw computational power.
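
The second step above, learning from a stronger model’s outputs, can be sketched as a simple data-collection loop. This is a hedged illustration rather than DeepSeek’s pipeline: `build_distillation_dataset`, `to_jsonl`, and `toy_teacher` are hypothetical names, and the toy teacher is a stand-in for what would really be a call to a large language model. Each prompt is paired with the teacher’s answer, and the resulting records become fine-tuning data for the smaller model.

```python
import json

def build_distillation_dataset(prompts, teacher):
    """Pair each prompt with the teacher's answer to form supervised training records."""
    records = []
    for prompt in prompts:
        answer = teacher(prompt)
        # Skip prompts the teacher could not answer; empty targets teach nothing.
        if answer and answer.strip():
            records.append({"prompt": prompt, "completion": answer.strip()})
    return records

def to_jsonl(records):
    """Serialize records one JSON object per line, a common fine-tuning file layout."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)

def toy_teacher(prompt):
    """Hypothetical stand-in for a large model; a real teacher would be an LLM call."""
    canned = {"What is 2 + 2?": "4", "Capital of France?": " Paris "}
    return canned.get(prompt, "")

prompts = ["What is 2 + 2?", "Capital of France?", "Unanswerable prompt"]
dataset = build_distillation_dataset(prompts, toy_teacher)
print(to_jsonl(dataset))
```

The smaller model is then fine-tuned on these pairs with ordinary supervised training, which is why this approach needs only the teacher’s text outputs and not access to its internals.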

The Open-Source Effect: How DeepSeek Changed the AI Industry

After their success, DeepSeek did something bold: they open-sourced their models. This move:

✅ Challenged Big Tech’s Closed Approach – Unlike OpenAI’s guarded models, DeepSeek made AI accessible.

✅ Spurred a New Wave of Innovation – Researchers at Stanford, the University of Washington, and Hugging Face adopted similar methods.

✅ Forced Even OpenAI to Rethink Strategy – Sam Altman admitted OpenAI may have been “on the wrong side of history” on open source.

This shift suggests that open collaboration could be the future of AI—not just proprietary systems.

The Catch: Distillation Alone Won’t Create AGI

While AI Distillation helps smaller players catch up quickly, companies like OpenAI and Google are still racing toward Artificial General Intelligence (AGI)—AI that can reason like a human.

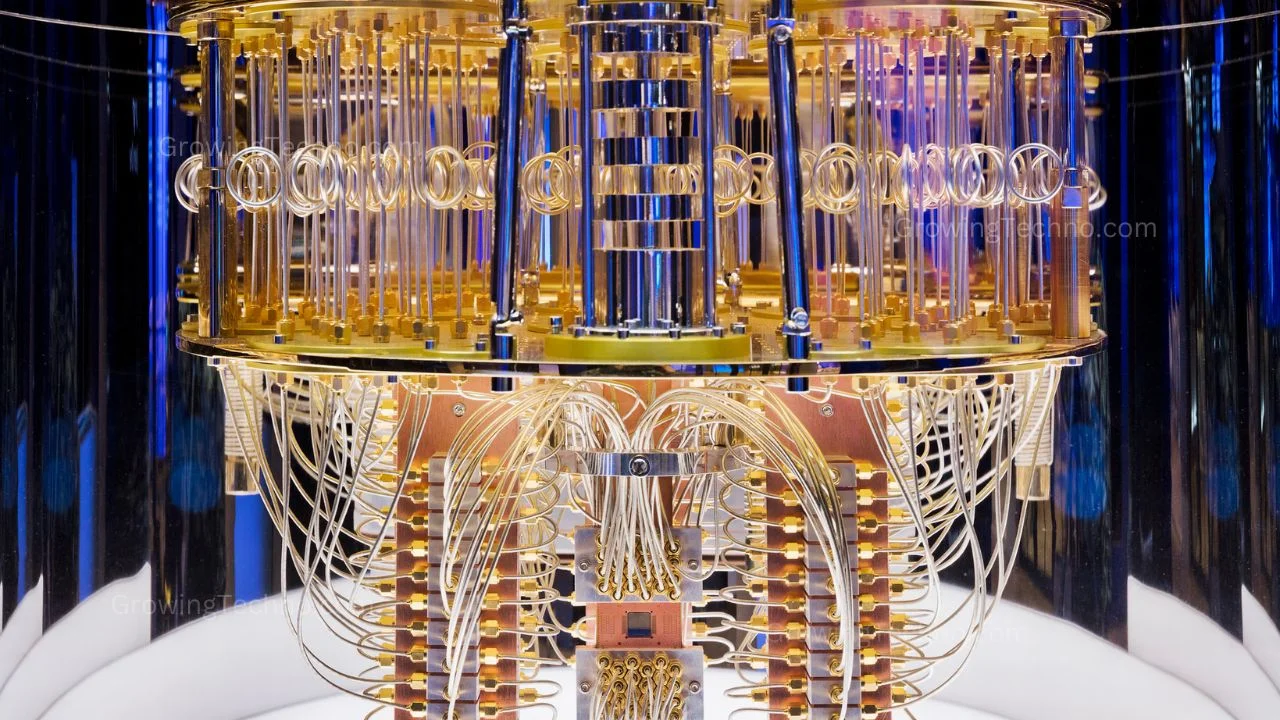

- OpenAI’s $500B Stargate project aims to build the computing infrastructure for far more capable AI.

- Google DeepMind is pushing boundaries with Gemini and beyond.

But the gap is narrowing. If startups keep refining distillation, they could keep pace without needing billions. The future of AI might belong to the smartest optimizers—not just the richest.

What This Means for the Future of AI

DeepSeek’s success proves that innovation isn’t just about money—it’s about working smarter. Here’s what’s next:

🔹 More Competition – Smaller players can now challenge Big Tech.

🔹 Cheaper, Faster AI Development – Businesses and researchers both benefit.

🔹 Shift Toward Efficiency – Raw computing power isn’t the only path to success.

One thing is clear: The AI race just got a lot more interesting.

What do you think? Is open-source the future of AI, or will the big players maintain their edge?